Using artificial intelligence (AI) to design experiments, and in some cases even to stand in for chemists' "intuition", while robots and other automated technologies run experiments efficiently and partly free chemists' hands: for many people, this has become the vision for the future of synthetic chemistry.

Synthetic chemistry is a foundational discipline that uses atoms and molecules as "bricks and tiles" to create new substances in the real world. From synthetic ammonia, the cornerstone of fertilizers, to nylon, which set off a materials revolution, to penicillin, which has saved countless lives, each breakthrough in synthetic chemistry has reshaped how people eat, dress, live and travel, and with them human civilization. Today, however, society places increasingly stringent demands on the performance of new substances and materials, and the traditional R&D model, which relies on chemists' experience, constant "trial and error" and manually "shaking bottles", needs to become more efficient.

At the "New Paradigm of Synthetic Chemistry Research - Robotics and AI Integration Seminar", which closed on June 29, leading scholars and industry experts, including Akira Aso, an academician of the Chinese Academy of Sciences, and Yang Weimin, an academician of the Chinese Academy of Engineering, discussed how two powerful technological waves, AI and robotic automation, can inject new vitality into synthetic chemistry.

The chemist's dilemma: finding your way in an infinite molecular universe

Synthetic chemistry is the science of creating materials. Fertilizers, plastics and the drugs that protect human health all originate from the ingenious molecular-level designs of chemists and pharmacists. Akira Aso, an academician of the Chinese Academy of Sciences and a professor in the Department of Chemistry at Fudan University, presented his team's nearly 30 years of research on the synthesis, reactions and properties of allenes, illustrating chemists' continuing effort to find and construct molecules and to explore and optimize reaction pathways.

The core challenge of this work lies in the vastness of chemical space. Hong Xin, a researcher and doctoral supervisor in the Department of Chemistry at Zhejiang University, told the meeting that the number of small and medium-sized molecules that could in theory be synthesized is as high as 10 to the 60th power, an astronomical figure far exceeding the number of stars in the universe. In this vast "molecular universe", the search for "new stars" with specific functions, and for the relationships among them, has traditionally followed two paths: experiment and theory.

The first is the "top-down", experiment-driven model. Like experienced explorers, chemists rely on existing knowledge maps and keen intuition, constantly adjusting their route as they go. Skilled chemists can "iterate" their way to high-performing new reactions by making subtle changes to the structure of catalysts or reactants on the basis of limited experimental data. This approach depends not only on a large number of experiments and much trial and error, but sometimes also on a scientist's sharp eye for what the results reveal.

The second is the "bottom-up", theory-driven model, the path of theoretical and computational chemists. Starting from the first principles of quantum mechanics, they use supercomputers to simulate how molecules interact and to calculate the energy change at each step of a reaction, revealing at the atomic level why the reaction occurs and where its selectivity comes from.

This method is extremely accurate, but it comes at a very high cost in computation and time. Hong Xin noted that accurately understanding a catalyst's mechanism of action and structure-activity relationship may require thousands of transition-state calculations. That makes it difficult for the method to guide synthetic decisions in real-world settings in a timely manner.

Faced with the vastness of molecular space, both paths, one relying on experience and intuition and the other on computing power and theory, run into major limits on efficiency and generality, creating an urgent need for new tools. Among the options, using AI to design experiments and in some cases even to stand in for chemists' "intuition", while robots and other automated technologies run experiments efficiently and partly free chemists' hands, has become the vision for the future of synthetic chemistry in the eyes of many.

Practice of new tools: the reality of automation and the beginning of AI

Having "tireless" robots and other automated equipment carry out experiments according to preset programs in place of people can greatly improve the efficiency of experiment-driven synthetic chemistry research. In industry, the pursuit of efficiency pushed automation to the front line of research and development long before the recent breakthroughs in generative AI.

Yang Weimin, an academician of the Chinese Academy of Engineering and president of Sinopec Shanghai Research Institute of Petrochemicals, said in his speech that in petrochemicals, developing a new catalyst used to follow a long cycle of "ten years of hard work". As early as around 2010, the institute cooperated with an American company to introduce a high-throughput technology platform that uses robotic arms and precise fluid-control systems in place of manual labor to run large numbers of experiments in parallel.

Researchers can first use high-throughput computing to systematically design thousands of different catalyst formulas, then screen them rapidly on the automated platform, uncovering patterns and better-performing materials that would take a great deal of manpower to find by traditional "trial and error". Working this way, they developed a nano-sheet molecular sieve that solved the problem of producing high-value chemicals from refinery waste gas; it has since been applied in many industrial plants across the country.
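
The "design thousands of formulas, then screen them in parallel" workflow can be pictured with a minimal sketch. It assumes a simple full-factorial design; the factor names, levels and batch size below are hypothetical placeholders and do not come from Sinopec's platform.

```python
from itertools import product

# Hypothetical design factors and levels, for illustration only.
FACTORS = {
    "template": ["T1", "T2", "T3", "T4"],
    "si_al_ratio": [10, 20, 40, 80, 160],
    "temperature_c": [120, 140, 160, 180],
    "time_h": [12, 24, 48, 72],
    "additive": ["none", "A", "B", "C"],
}

def enumerate_formulas(factors):
    """Return every combination of factor levels (full factorial) as dicts."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]

def batches(items, size):
    """Split items into consecutive batches of at most `size` for parallel runs."""
    return [items[i:i + size] for i in range(0, len(items), size)]

formulas = enumerate_formulas(FACTORS)
runs = batches(formulas, 96)  # 96 parallel slots per run is an assumed figure
print(len(formulas), "candidate formulas in", len(runs), "parallel runs")
```

Even five factors with four or five levels each multiply into more than a thousand candidates, which is why the screening step is handed to an automated platform rather than done by hand.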

In industrial research, researchers already have large amounts of data on the target reactions and material properties, along with clear optimization goals, and these form the basis for automation and high-throughput computation. In cutting-edge academic research, however, relevant data are very scarce and the experimental direction itself still has to be explored. In this "no man's land", the use of new tools presents a different picture.

Last year, under the guidance of Akira Aso's team, Jingtai Technology custom-built an intelligent synthesis workstation for the team's laboratory to support research on ATA reactions. The workstation can run 48 experiments at the same time, greatly speeding up experimental trial and error, accelerating the synthesis and screening of catalysts, and efficiently producing standardized data. It is an application of Jingtai Technology's independently developed intelligent autonomous experiment platform to a specific research scenario.

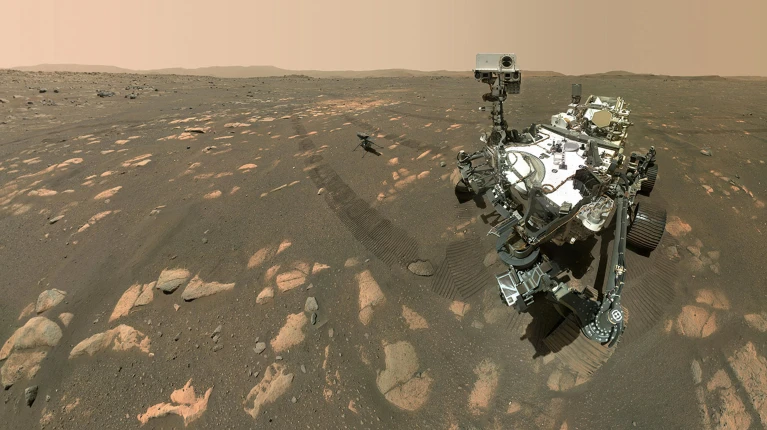

Jingtai Technology's intelligent synthesis workstation. Image provided by the organizer

Aso said that running experiments on the intelligent synthesis workstation is indeed far more efficient than under the previous research model, but that interpreting the results cannot be left to machines. "Through automation, we have observed some interesting results. But the role played by AI is still very limited and there is great room for development."

He emphasized that the value of AI in chemistry lies in being an efficient "helper" for chemists rather than a substitute. "Many people think that AI will replace the human brain, but I personally feel that this is impossible," Aso told The Paper. "Chemical discoveries are often based on some accidental discoveries," he said, and interpretation by machines trained on existing knowledge tends to filter out such accidents. The final scientific insight therefore still has to come from the human brain.

The chemistry of the future: smart models and autonomy

"AlphaFold", the AI system developed by Google DeepMind, predicts protein structures with very high accuracy, and its developers shared the 2024 Nobel Prize in Chemistry. Akira Aso believes that in the field of protein structure, years of exploration have left scientists with a large body of data, such as the crystal structures and functions of known proteins, which created good conditions for training AI. In many other cutting-edge fields, AI still faces the problem of data scarcity.

Hong Xin's work offers one possible path. He pointed out that when exploring the structure-activity relationship (the relationship between molecular structure and activity) of a new chemical substance, there are often only very limited experimental data. A model trained directly on such "small-sample" data generalizes poorly and cannot yield chemically meaningful predictive designs.

To this end, his team designed a "hierarchical learning" framework that lets AI approach the target structure-activity space step by step, improving its modeling and prediction. When developing a new nickel catalyst, they first trained a "base model" on a large body of literature data for mechanistically related palladium catalysts, so that the AI could learn the overall selectivity rules for this type of reaction. They then used a small amount of precious nickel catalyst data to "fine-tune" and "correct" the model, adapting it to the new target system. In this way, they successfully predicted and synthesized a new, efficient and highly selective catalyst ligand, demonstrating the potential of AI to achieve innovative discoveries in a "small data" scenario.
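
The two-stage idea, learning general rules from plentiful related data and then correcting with scarce target data, can be illustrated with a toy sketch. This is not the team's framework: it assumes a one-variable linear model, synthetic numbers, and plain gradient descent as the fine-tuning step, all chosen only to show the pattern.

```python
def fit_line(xs, ys):
    """Pretraining step: ordinary least squares for y = w*x + b (closed form)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, my - w * mx

def fine_tune(w, b, xs, ys, lr=0.05, steps=200):
    """Fine-tuning step: start from pretrained (w, b), descend on the small set."""
    n = len(xs)
    for _ in range(steps):
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        w -= lr * 2 / n * sum(e * x for e, x in zip(errs, xs))
        b -= lr * 2 / n * sum(errs)
    return w, b

def mse(w, b, xs, ys):
    """Mean squared error of the line (w, b) on the given points."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Synthetic stand-ins: x is a made-up ligand descriptor, y a selectivity score.
source_x = [i / 10 for i in range(-20, 21)]   # plentiful "palladium-like" data
source_y = [1.5 * x + 0.2 for x in source_x]
target_x = [-1.0, 0.0, 1.0]                   # scarce "nickel-like" data
target_y = [1.8 * x + 0.6 for x in target_x]  # related but shifted trend

base = fit_line(source_x, source_y)
tuned = fine_tune(*base, target_x, target_y)
print("base model error on target: ", mse(*base, target_x, target_y))
print("tuned model error on target:", mse(*tuned, target_x, target_y))
```

In a model this small the pretrained parameters serve only as a starting point; the benefit described above is expected to matter most when the model is far too rich to be fitted from a handful of target points alone.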

Besides using "knowledge transfer" to train more accurate AI models, using AI to make automation "smarter" is another way to raise the efficiency of synthetic chemistry in the future. Ma Jian, co-founder and CEO of Jingtai Technology, described it as the difference between automation and self-driving.

"Automation is like taking the subway or train when people travel," he explained. "The path between two points is very clear... everyone gets on the train together and goes directly to the destination." In the field of chemistry, automation platforms are good at executing large-scale, repetitive and standardized processes, such as high-throughput screening, testing hundreds of preset formulas at a time. This model is extremely efficient, but lacks flexibility.

Autonomy, on the other hand, is more like a "self-driving car". It can not only perform tasks, but also sense the environment, analyze data and make decisions along the way. Ma Jian calls this "Lab Auto-Driving". After completing an experiment, such a laboratory can immediately analyze the results and independently design and launch the next, better-optimized experiment, forming a rapidly iterating "design-execution-learning" closed loop.
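
A "design-execution-learning" loop of this kind can be sketched in a few lines. The example below is an assumption-laden toy, not Jingtai Technology's system: the robot is replaced by a made-up yield curve, the only variable is temperature, and "learning" is reduced to re-centering and narrowing the search around the best result so far.

```python
def run_experiment(temperature_c):
    """Stand-in for the robot: a made-up yield curve peaking at 137 C."""
    return 90.0 - 0.02 * (temperature_c - 137.0) ** 2

def design(center, half_width, n=5):
    """Design step: propose n evenly spaced conditions around the current best."""
    step = 2 * half_width / (n - 1)
    return [center - half_width + i * step for i in range(n)]

def closed_loop(low, high, rounds=6):
    """Repeat design -> execute -> learn, halving the search window each round."""
    center, half_width = (low + high) / 2, (high - low) / 2
    history = []
    for _ in range(rounds):
        candidates = design(center, half_width)                 # design
        results = [(run_experiment(t), t) for t in candidates]  # execute
        history.extend(results)
        best_yield, center = max(results)                       # learn: re-center
        half_width /= 2                                         # learn: narrow
    return center, best_yield, history

best_t, best_y, history = closed_loop(60.0, 200.0)
print(f"best temperature about {best_t:.1f} C, simulated yield {best_y:.1f}, "
      f"{len(history)} experiments")
```

The contrast with plain automation is in the loop body: a fixed high-throughput screen would test a preset list once, whereas here each round's results decide what the next round tests.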

Building such an "AI+Robot" autonomous laboratory is a vision shared by many companies and research institutions, including Jingtai Technology. The company is working closely with scientists and industry practitioners on its intelligent autonomous experiment platform, which integrates automated experiment execution with AI-based experiment prediction and design to enable efficient iteration between "dry" and "wet" experiments (computational simulation and real experiments). The platform has been applied in biomedicine, new materials, new energy and other fields.